About Me

I am a Data Scientist with over 4 years of experience, specializing in AI, Machine Learning, Generative AI, and MLOps. My expertise lies in building scalable machine learning systems that drive real business impact, automating workflows, and optimizing AI solutions for various industries, including Healthcare, Logistics, Sales, and Customer Support.

From predictive modeling and LLM applications to cloud-based AI deployments and robust MLOps pipelines, I thrive at the intersection of technology and business strategy. I've had the privilege of working with industry leaders like Motorola, Caliber Home Loans, and Logitech, ensuring that AI-driven solutions create measurable value.

With a strong focus on agile collaboration, I have led AI/ML teams on various projects, working closely with cross-functional teams to bridge the gap between data science and business objectives. My experience in client interactions has helped me translate complex AI solutions into actionable insights that drive strategic decision-making.

Beyond work, I'm passionate about playing badminton and reading articles on emerging AI trends. I believe in continuous learning and innovation, always exploring new frontiers in AI and automation.

Let's connect and bring AI-driven ideas to life!

Skills

Experience

Education

Certifications

- Areas of Expertise

• Machine Learning & AI | Conversational AI & NLP Solutions | MLOps & Model Deployment | Data Modeling & Feature Engineering | GenAI & FinTech Solutions - Languages

• Python, SQL, C/C++, Java, Bash - Machine Learning

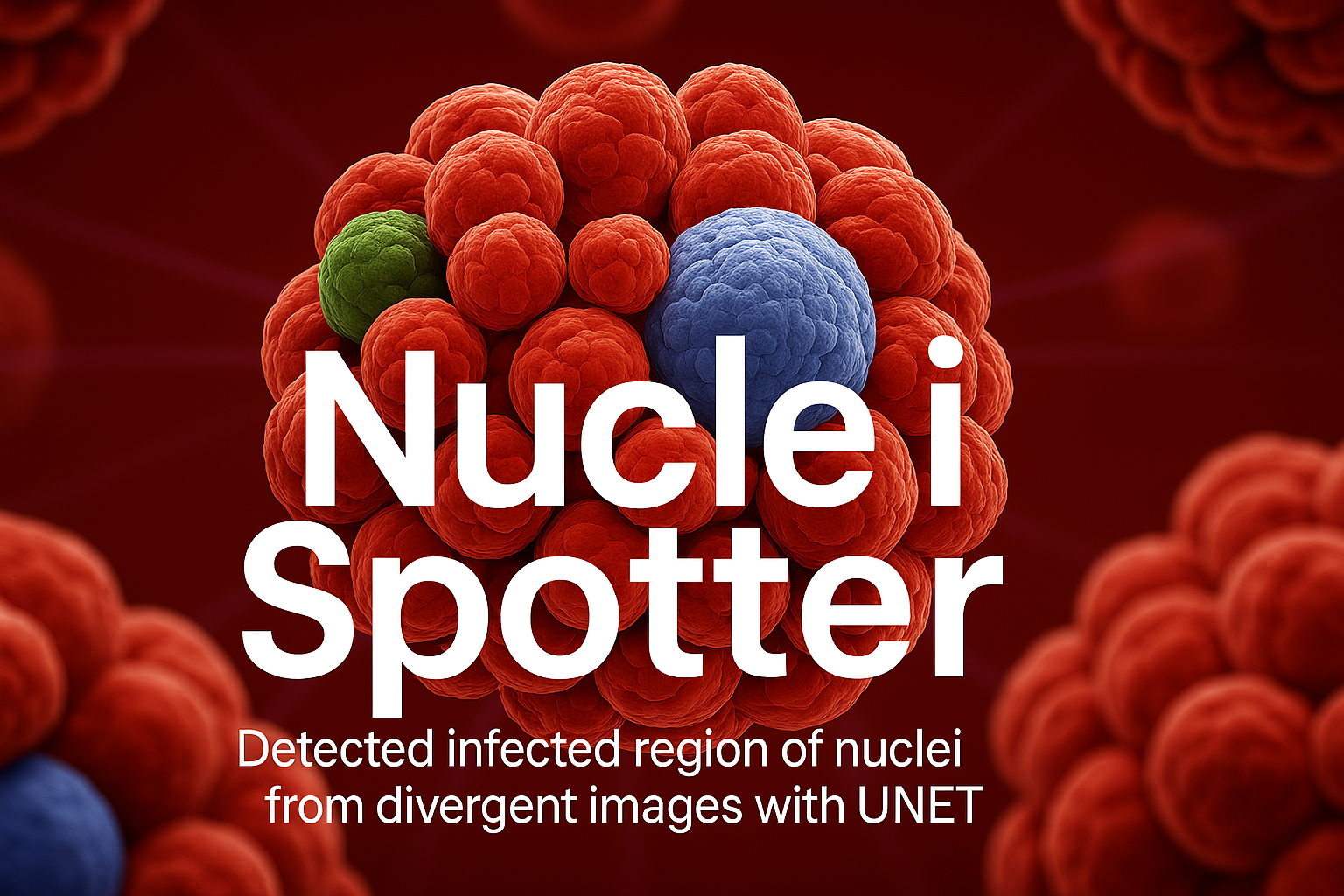

• Classification, Regression, t-SNE, PCA, CNN, UNet, OpenCV, NLTK, SpaCy - Frameworks

• Sciki-learn, TensorFlow, PyTorch, Keras, SciPy, XGBoost, Hyperopt, Pandas, NumPy, Vaex, Dask, PySpark, MLflow, Streamlit - Data Science

• Statistical Analysis, Data Mining, Data Wrangling, Data Drift, Concept Drift, A/B Testing, T-Test - Generative AI

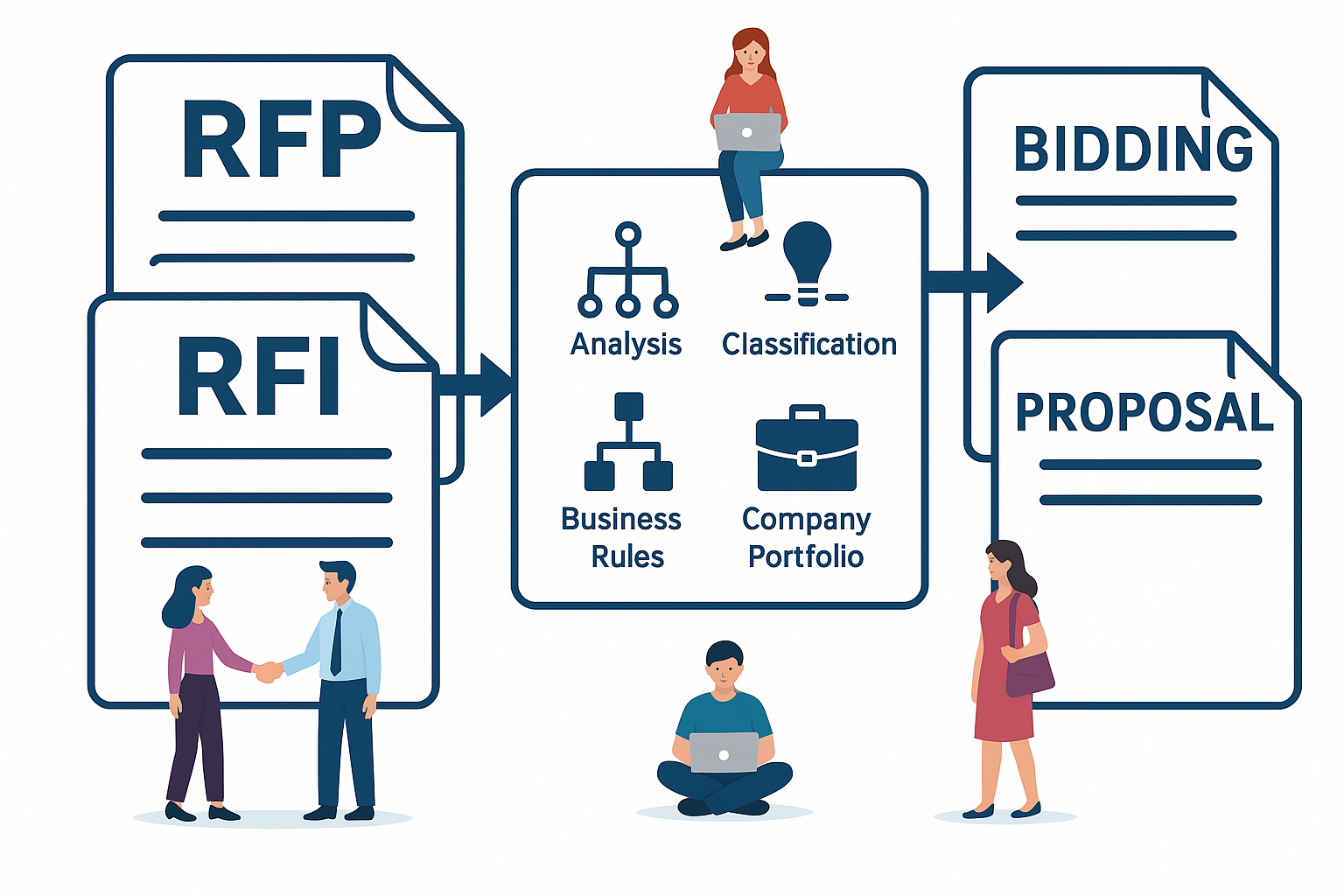

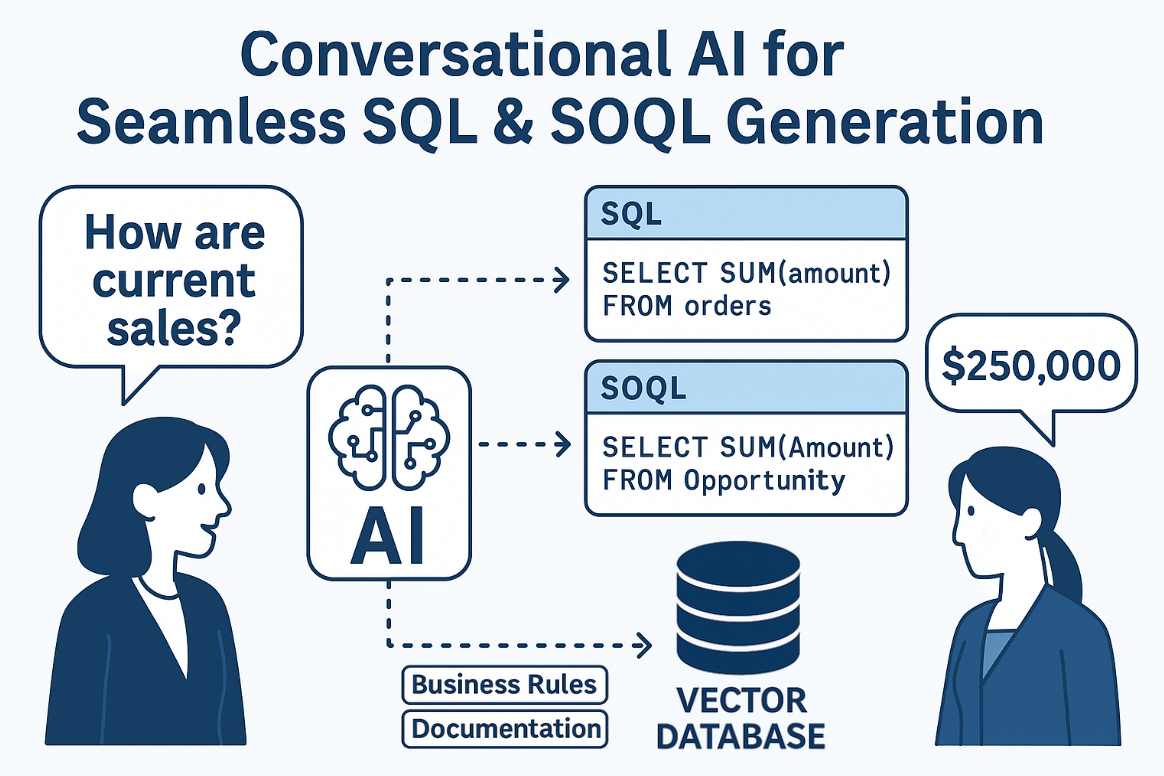

• OpenAI, Ollama, Deepseek, Cohere, Mistral, Gemini, Microsoft Phi, Vertax, LangChain, LlamaIndex, HuggingFace, Transformers, RAG (Naive, Rerank, Agentic), LangGraph, NL2SQL, Vector Database (Chroma, Qdrant, Faiss, Pinecone), PEFT, LoRA, DSPy, Explainable AI, Prompt Engineering, Bias Mitigation, Ragas - Database Management

• MySQL, SQL Server, PostgreSQL, MongoDB, Snowflake, Kafka, Salesforce SOQL, Oracle, Elasticsearch, MariaDB, NoSQL - Data Visulization

• Power BI, Matplotlib, Seaborn, Plotly, Dash - Cloud & DevOps

• AWS, Azure, GCP, Sagemaker, Bedrock, Databricks, Hadoop, AWS Lambda, Serverless, Docker, Kubernetes, Airflow, Git, Jenkins, SSH, Github Actions, Infrastructure-as-Code

- Mar 2022 - Present (Giggso Inc.)

Data Scientist - Dec 2020 - Mar 2022 (Giggso Inc.)

Junior Data Scientist - Aug 2018 - Feb 2021 (Fast NUCES University)

Teaching Assistant - Oct 2019 - Sep 2020 (Fast NUCES University)

Full Stack Intern

- National University of Computer & Emerging Sciences (NUCES)

Bachelor's of Computer Science - Government Graduate College

Intermediate in Computer Science